I recently had a bit of fun with a client who runs a huge, hulking forum on an old version of vBulletin.

Forums can be a lot of fun to work with. However, they’re prone to causing SEO headaches: thread URLs getting out of hand, pagination, and indexing preferences, all of which contribute to our favourite terms, “index bloat” and “crawl budget”.

This particular iteration of vBulletin and the site’s codebase in general made it tricky to effectively implement certain SEO recommendations, so there was also an element of selectivity throughout the project as a whole.

The site in question is a 20-year-old forum with well over a million indexable URLs. While some of the key forum sections were the main traffic drivers, there was an ongoing conflict with other (newer by contrast) sections of the site that were responsible for the main affiliate and advertising revenue streams, such as product reviews, articles and interview sections. These were built out to capture search demand from people looking for “product ABC review” or “best ABC product 2022”, and a lot of work had been done to target this.

A recurring problem, however, was that old forum threads where users were discussing products were often competing in the SERP with these relatively new static pages, so the pages built for that search intent weren’t always the ones capturing it.

I’m not going to go fully into the approach for this macro issue in this post, as it ended up becoming particularly nuanced depending on the situation (said forum URLs were still a massive traffic driver in many cases, for example). Keep it top of mind as I continue, though.

Flushing Out Old Forum Content From the Index

One of the discoveries off the back of the various indexation conundrums during this project was identifying old forum threads that fulfilled certain criteria for deindexing.

This was targeted at URLs that fulfilled all three of the following:

- Posts that were inactive for a certain period of time

- Posts that had no responses after a certain period of time

- And crucially: posts that generated a carefully-agreed threshold of little to no organic traffic over a certain period of time and had no existing keyword rankings.

Think of it as culling old legacy content that had little use to Google (and likely the user) and was, as the server logs showed, wasting crawl activity and our SEO efforts.
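As a rough illustration, the three criteria above could be expressed as a simple filter. This is a minimal Python sketch, not the process that was run on the site: the `Thread` fields, the two-year idle window and the zero-click threshold are all assumptions standing in for the "certain period of time" and the agreed traffic threshold.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Thread:
    # Hypothetical fields; in practice these would be joined from the forum
    # database, analytics and a rank-tracking export.
    url: str
    last_post: date        # date of the most recent post in the thread
    replies: int           # responses to the opening post
    organic_clicks: int    # organic visits over the review window
    ranking_keywords: int  # keywords the URL currently ranks for


def is_deindex_candidate(thread, today, max_idle_days=730, max_clicks=0):
    """Return True only when all three criteria hold for the thread."""
    inactive = (today - thread.last_post) > timedelta(days=max_idle_days)
    unanswered = thread.replies == 0
    no_search_value = (
        thread.organic_clicks <= max_clicks and thread.ranking_keywords == 0
    )
    return inactive and unanswered and no_search_value
```

Requiring all three conditions keeps the filter conservative: an old, unanswered thread that still ranks or pulls in traffic is left alone.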

Thanks to the structure of the site and the nature in which old forum threads were handled in the hierarchy of the bulletin board, they were often accessed through various strings of 302s and 301s across the site which added to our problems.

We eventually discovered over 200,000 forum URLs that hit the above criteria. However, mass deletion and redirection wasn’t an option. The forum moderators didn’t want to anger their user base by removing loads of content, and this would also cause a lot of broken journeys across the site.

Creating robots.txt rules was problematic too as these threads came from various forum sections and subfolders across the site, and URL modifying for these specific examples would again cause a spike in broken/redirected journeys across the site.

Affirming Noindex

The first logical step here was to noindex. This was agreed on and noindex tags were mapped quickly to these URLs over the course of a week or so.

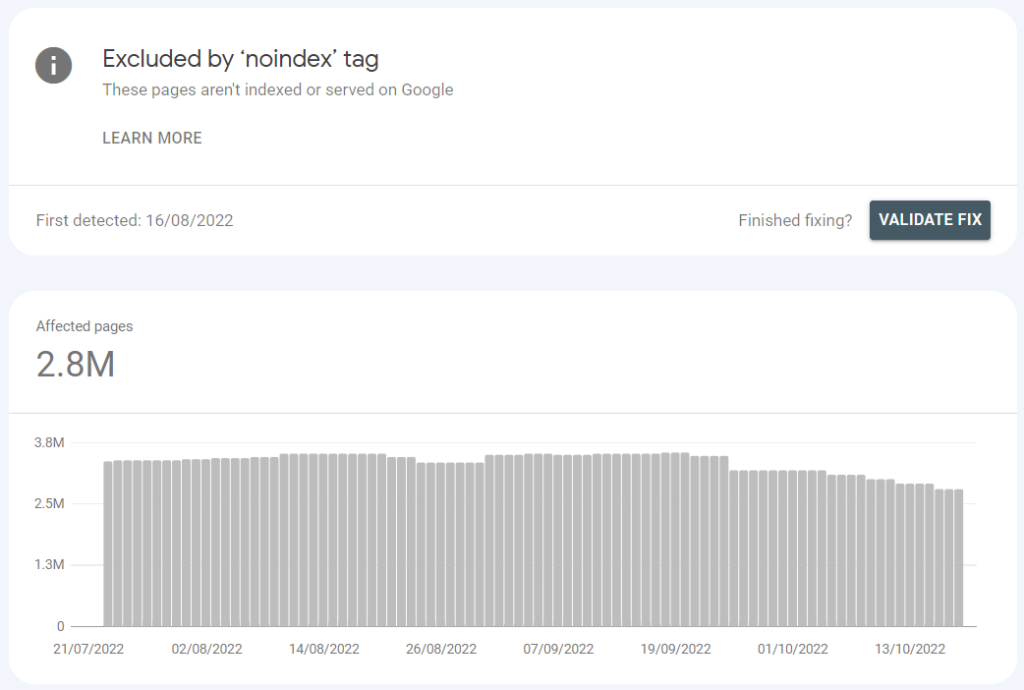

Interestingly, the trend wasn’t picked up by Google Search Console (see screenshot below) which did create some mild anxiety as to “what have we actually done to these 200,000+ URLs” but as always manual affirmation eased some nerves.

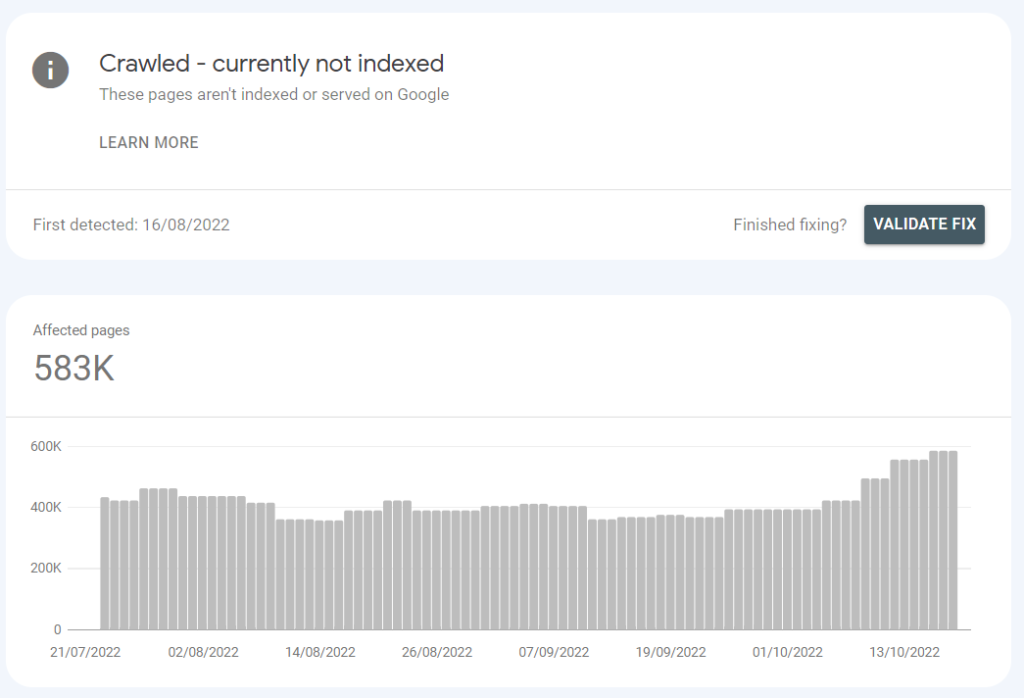
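For anyone wanting to do that manual affirmation at scale, a spot check for the noindex directive could look something like the sketch below. It assumes you have already fetched the page HTML and, optionally, the value of the `X-Robots-Tag` response header. The function and class names are hypothetical, and it only handles the simple comma-separated form of the directive.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> / <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            content = attr.get("content") or ""
            self.directives.extend(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def has_noindex(html, x_robots_tag=""):
    """True if noindex appears in a robots meta tag or the X-Robots-Tag value."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header = [d.strip().lower() for d in x_robots_tag.split(",")]
    return "noindex" in parser.directives or "noindex" in header
```

Running this over a sample of the affected URLs gives a quick sanity check that is independent of whatever Search Console happens to be reporting that week.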

But anyway, I digress and of course, our process caused a spike in “crawled – currently not indexed” in Search Console roughly to the tune of 200,000 URLs.

While we may have been starting our journey to address some of the problems with indexation of poor content, the crawl budget issues remained.

Deterring Crawlers Through Mangled Journeys

So what were the next steps to deter Googlebot from crawling these disused threads that were so integral to this site’s infrastructure? Well, as it stands, this is an ongoing experiment, though I’ll walk you through some of the processes used:

Step 1: Refreshing Sitemaps

As I came into the project, the site only had one sitemap that represented only 8,000 URLs, despite a total of over one million indexed URLs (down to about 750,000 after our tweaks and at the time of writing).

Following this project as well as a number of other initiatives across the site, we generated new sitemaps that were capped at 30,000 URLs for different areas of the site and its forum sections.

Crucially for the forum sections, dynamic sitemap rules were applied to ensure that URLs that fulfilled the aforementioned noindex criteria weren’t included, and that desired indexed URLs emerging from forum activity were. A good rule of thumb is to re-run a manual audit of these sitemaps perhaps every month or so to double-check.
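The dynamic sitemap rule described above can be sketched roughly as follows. This is an illustrative Python version, not the site's actual implementation: `build_sitemaps` is a hypothetical helper that assumes you already have the list of candidate URLs for a section and the set of noindexed URLs.

```python
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 30_000  # the cap used on this project; the protocol allows up to 50,000


def build_sitemaps(urls, noindexed, max_urls=MAX_URLS):
    """Return a list of sitemap XML strings, skipping noindexed URLs."""
    keep = [u for u in urls if u not in noindexed]
    documents = []
    for start in range(0, len(keep), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in keep[start:start + max_urls]:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        # encoding="unicode" returns a str without an XML declaration.
        documents.append(ET.tostring(urlset, encoding="unicode"))
    return documents
```

Regenerating the files from the live noindex list on a schedule, rather than editing them by hand, is what keeps newly deindexed threads out and newly active threads in.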

Step 2: Fabricating Orphan Journeys

Now, this was the arduous bit. Once we’d established that these pages had been visited by Googlebot and that noindex was respected, deterring Googlebot from crawling them again was our next move.

The nature in which these (and many other) forum threads are structured within the framework of the master forum (think countless internal, user profile and breadcrumb links among others) meant that particular attention had to be applied.

The goal here was to deliberately orphan these pages where possible. The quickest way of establishing the first steps to doing this was to use Screaming Frog to grab all the internal links pointing to these pages.

Where there were internal links to these pages elsewhere in the body content of the site, we did our best to remove these; and where there were links to them in areas such as breadcrumbs or through forum paginated pages we added nofollow (think Forum A, page 15, link to X thread made nofollow).
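The triage itself is simple enough to sketch. The Python below assumes the crawler's inlinks export has been loaded into a list of dictionaries with hypothetical `source`, `target` and `position` keys (these are illustrative names, not the export's real column headers), and splits the links into the two treatments described above.

```python
def triage_inlinks(inlinks, deindexed):
    """Split links pointing at deindexed threads into 'remove' and 'nofollow'."""
    actions = {"remove": [], "nofollow": []}
    for link in inlinks:
        if link["target"] not in deindexed:
            continue  # link points at a page we still want crawled
        if link["position"] == "content":
            # Body-content links were removed outright where possible.
            actions["remove"].append(link)
        else:
            # Breadcrumb and pagination links stay for users, with nofollow.
            actions["nofollow"].append(link)
    return actions
```

The output is effectively a to-do list for the developer: one batch of links to strip and one batch to mark nofollow.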

While this was a rather hackneyed approach, it ensured that for the most part, the user journeys were preserved. The developer, on the other hand, was at breaking point by this stage.

Is there a step three? Probably. But I would say for now just rinse, repeat, check your server logs and crack on.
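On the server log front, a minimal sketch of the kind of check I mean is below. It assumes access logs in the common "combined" format and a set of deindexed paths to watch. The regular expression and the `googlebot_hits` helper are illustrative, and matching on the user-agent string alone can be fooled by spoofed bots, so treat the counts as indicative.

```python
import re
from collections import Counter

# Pulls the request path and user agent out of a combined-format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(log_lines, watched_paths):
    """Count requests claiming to be Googlebot for each watched path."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines that are not in the expected format
        if "Googlebot" in match["agent"] and match["path"] in watched_paths:
            hits[match["path"]] += 1
    return hits
```

If the orphaning is working, the counts for the deindexed threads should trend towards zero over successive log exports.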

A Note on Breadcrumbs & Orphan URLs for Large Forum Sites

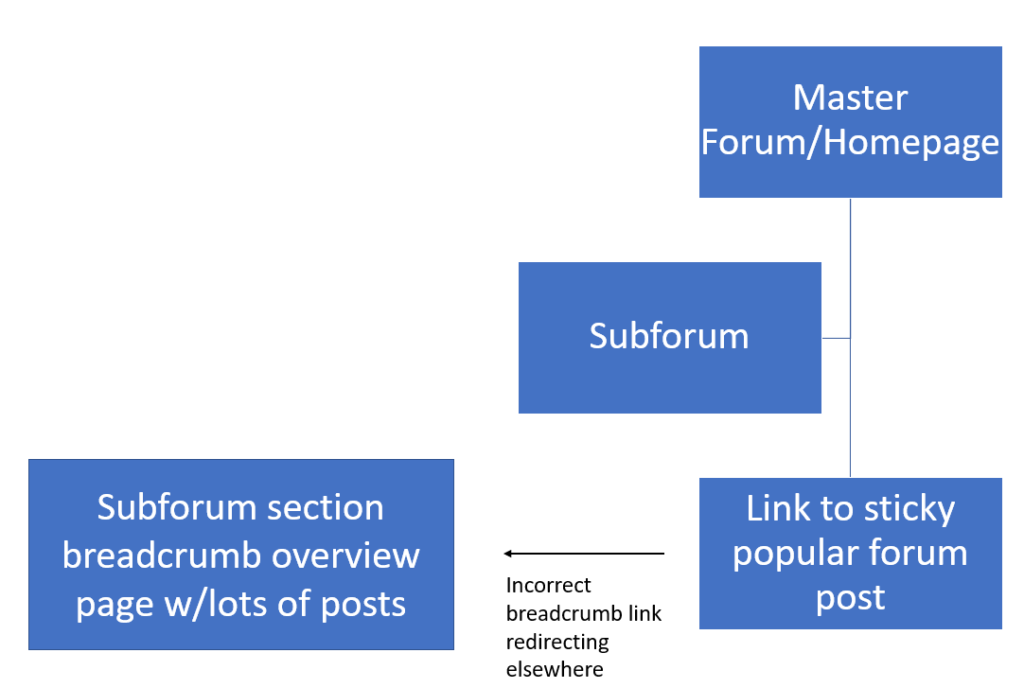

Aside from this, one of the first things I discovered on the site in question was a bounty of orphan URL issues. At the early stages of my auditing, another brilliant crawling software tool (in this case Sitebulb) flagged up over 93,000 orphaned URLs that were often top-commented and visited user forum posts (the stuff you might want indexing and ranking) that stick to the top of any given sub-forum based on popularity.

This was caused by a variety of redirect hops throughout the breadcrumb structure in various subforums that were often taking Googlebot (and the user) back to the master forum page.

Clicking on these navigated you to a specific post URL within a subforum section, and the breadcrumb link back to the main subforum in question would either redirect to the forum homepage or elsewhere, often causing orphan issues with the rest of the content housed under that “mid-tier” section of the forum breadcrumb:

That was a mouthful, so see the below journey to get what I mean:

While this may have been specific to the site in question, the chop-and-change nature of forum sites does mean you have to keep on top of your breadcrumb and internal linking structure in general. Beware of crawl traps too.

Conversely, why not try doing this in reverse to address the main point of this blog and see what happens?
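To keep on top of the breadcrumb redirect problem described above, something like the following sketch can flag breadcrumb links that no longer resolve to the page they claim to. It assumes two inputs taken from a crawl: a `redirects` mapping of URL to redirect target, and a list of `(thread_url, breadcrumb_url)` pairs. Both structures and both function names are hypothetical.

```python
def resolve(url, redirects, max_hops=10):
    """Follow a crawl-derived redirect map; return (final_url, hops)."""
    hops = 0
    seen = {url}
    while url in redirects and hops < max_hops:
        url = redirects[url]
        hops += 1
        if url in seen:  # redirect loop, stop following
            break
        seen.add(url)
    return url, hops


def broken_breadcrumbs(breadcrumbs, redirects):
    """Return breadcrumb links whose target redirects somewhere else."""
    broken = []
    for thread_url, crumb_url in breadcrumbs:
        final_url, hops = resolve(crumb_url, redirects)
        if hops > 0:
            broken.append((thread_url, crumb_url, final_url, hops))
    return broken
```

Any breadcrumb that comes back with one or more hops is worth a look, especially when the final URL is the forum homepage rather than the subforum.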

Any Results or Was This Just a Huge Waste of Everyone’s Time?

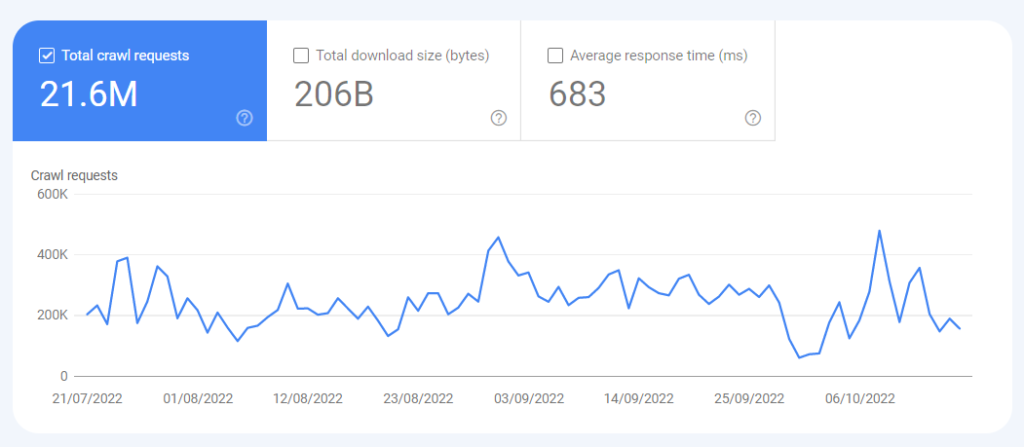

Once the fresh sitemaps were submitted we saw an expected spike in crawl rate across the site a week or so later (see early October). In tandem, we switched on Cloudflare’s Crawler Hints tool, which uses the IndexNow protocol to signal content changes to participating search engines. This is useful for gargantuan sites like old forums on ancient CMSs that can take a while to see things move.

Even though we’ve seen our desired basket case content drop out of the index and have deliberately averted passing PageRank to them in most cases, it’s likely too early to assess tangible benefits in terms of:

- Log files showing what we want them to show

- Value content being given the time of day

- Helping with our overall goal of steering the Titanic and moving traffic to core revenue pages* (although the site is still predominantly a forum).

*There has been a nice uplift on these static-type pages following some of this work, though some of this can be attributed to other improvements we’ve been making in these areas in general.

In summary, I’d recommend frequent housekeeping of this order (if you have the time) or, at the very least, if you’re coming into an audit of a big forum site like this, perhaps consider it as an early priority.